Product Portfolio

One enterprise platform and two cross-cutting microservices that serve multiple domains: EPA emissions data for researchers, energy sector intelligence for AEs, and custom calculations for analysts across industries.

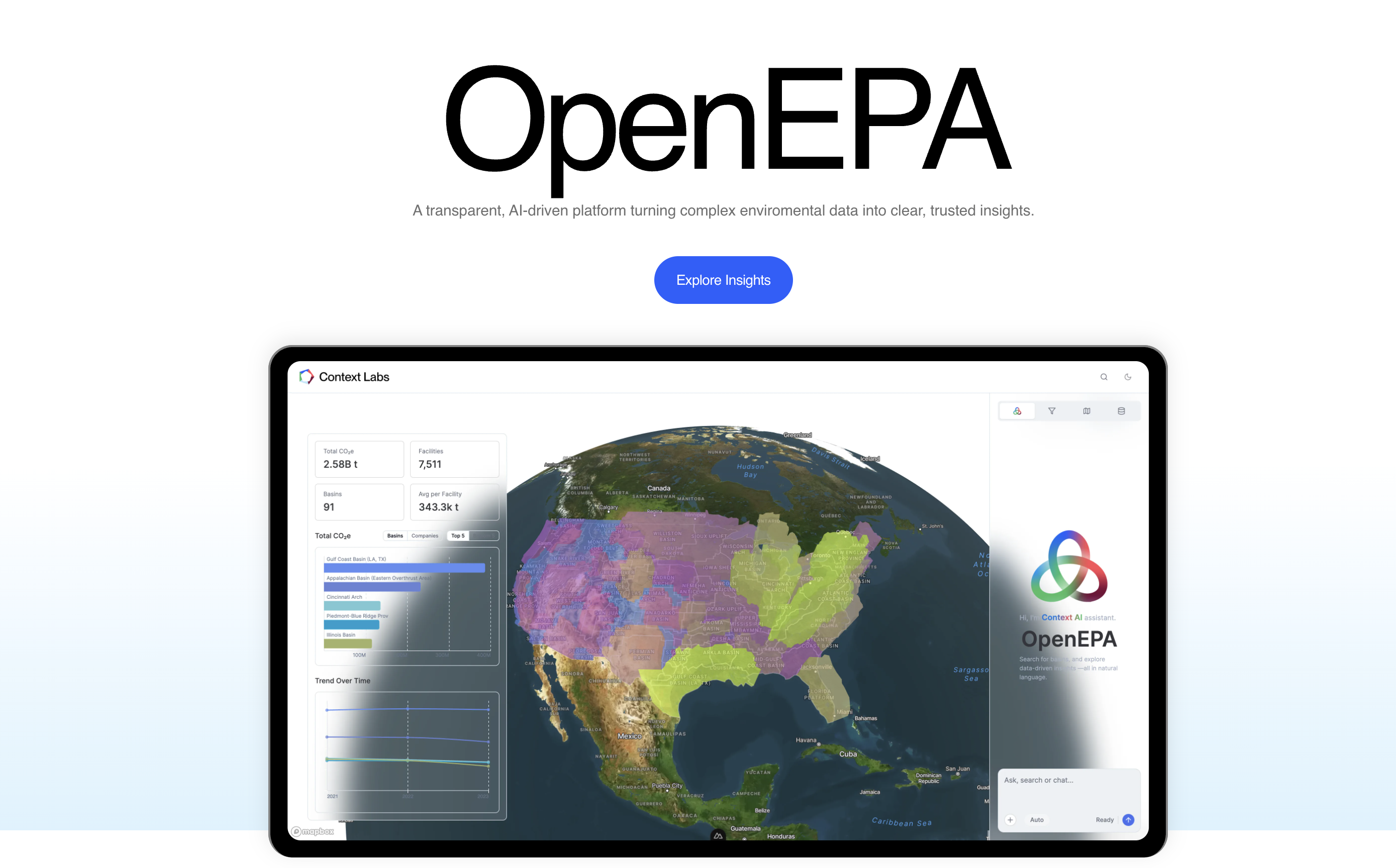

OpenEPA Platform

PilotEPA GHGRP emissions data platform (2010-2024) with AI Q&A, benchmarking views, and verifiable certificates for researchers and journalists.

2,800+ facilities • 15 years data • 99.9% uptime

Context AI Microservice

LiveAI-powered client onboarding and chat intelligence for energy sector. Vue 3 + TypeScript frontend with GPT-4 integration.

2+ hours to 5 min prep • $85M pipeline • 200+ hrs/mo saved

Calculation Editor Microservice

In DevelopmentUniversal calculation engine with formula transparency, methodology docs, and audit trails for verifiable analytics.

Academic-grade reproducibility • Cross-platform